Temporal

From the docs:

Temporal is a scalable and reliable runtime for Reentrant Processes called Temporal Workflow Executions.

A Temporal Workflow Execution is a durable, reliable, and scalable function execution. It is the main unit of execution of a Temporal Application.

Each Temporal Workflow Execution has exclusive access to its local state. It executes concurrently to all other Workflow Executions, and communicates with other Workflow Executions through Signals and the environment through Activities. While a single Workflow Execution has limits on size and throughput, a Temporal Application can consist of millions to billions of Workflow Executions.

After going through the docs and examples, Temporal isn't so different from Celery, although it definitely has more features and is far more advanced.

Before reading further, please skim through this page: https://docs.temporal.io/application-development/features

Let's dive right into the first example.

Polling example

https://github.com/temporalio/samples-python/blob/main/polling/

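    from datetime import timedelta

    from temporalio import workflow
    from temporalio.common import RetryPolicy

    # compose_greeting and ComposeGreetingInput are defined in the
    # sample's activities module.


    @workflow.defn
    class GreetingWorkflow:
        @workflow.run
        async def run(self, name: str) -> str:
            # Run the polling activity; when it fails, retry it every 60 seconds.
            return await workflow.execute_activity(
                compose_greeting,
                ComposeGreetingInput("Hello", name),
                start_to_close_timeout=timedelta(seconds=2),
                retry_policy=RetryPolicy(
                    backoff_coefficient=1.0,
                    initial_interval=timedelta(seconds=60),
                ),
            )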

The samples show three ways to perform polling. To be quite honest, we already do something similar with Celery.

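- Infrequent polling: the activity is retried through its retry policy (a fixed 60-second interval in the sample above, since the backoff coefficient is 1.0); we already do this.

- Frequent polling: similar to infrequent polling, only the frequency is much higher (the sample loops inside the activity instead of relying on retries).

- Periodic sequence: similar to frequent polling, except the polling happens in a child workflow.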

Polling and retries work much as they do in Celery.
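To make the retry settings in the sample concrete, here is a minimal sketch of how the waits between retries follow from `initial_interval` and `backoff_coefficient`. The `retry_intervals` helper is hypothetical, written only for illustration, and is not part of the Temporal SDK. It assumes each wait is the previous one multiplied by the coefficient, optionally capped at a maximum interval.

```python
from datetime import timedelta
from typing import List, Optional


def retry_intervals(
    initial_interval: timedelta,
    backoff_coefficient: float,
    attempts: int,
    maximum_interval: Optional[timedelta] = None,
) -> List[timedelta]:
    """Return the wait before each of the first `attempts` retries.

    Hypothetical helper for illustration; the real schedule is computed
    by Temporal itself, not by user code.
    """
    intervals = []
    current = initial_interval
    for _ in range(attempts):
        if maximum_interval is not None:
            current = min(current, maximum_interval)
        intervals.append(current)
        # The next wait is the current one scaled by the coefficient.
        current = current * backoff_coefficient
    return intervals


# Settings from the polling sample: a coefficient of 1.0 disables the growth,
# so the activity is retried at a fixed 60-second interval.
fixed = retry_intervals(timedelta(seconds=60), 1.0, attempts=4)

# For comparison, a coefficient of 2.0 doubles the wait after every failure.
doubling = retry_intervals(timedelta(seconds=1), 2.0, attempts=4)
```

The same arithmetic applies to a Celery task that is retried with a fixed or exponentially growing countdown, which is why the two approaches feel alike.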

Dynamic execution

This is where we'd really need help. In Packit we always have two inputs:

- Configuration (packit.yaml)

- Event

From these two we construct a chain of tasks. That chain would be a Temporal Workflow.

With Celery, creating tasks works fine, but it is very dynamic. We struggle with the first part: how to get efficiently from those two inputs to the series of tasks. Once we're in the handlers, things are good.
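The first part, going from the two inputs to a series of tasks, can be sketched as a pure function that is easy to test regardless of whether Celery or Temporal runs the result. This is only an illustration: `plan_tasks`, the `Event` class, and the simplified `jobs` structure are assumptions made for the example and do not mirror the actual Packit code.

```python
from dataclasses import dataclass
from typing import Any, Dict, List


@dataclass(frozen=True)
class Event:
    """Hypothetical, stripped-down event: only the trigger type matters here."""

    trigger: str  # e.g. "pull_request", "commit", "release"


def plan_tasks(config: Dict[str, Any], event: Event) -> List[str]:
    """Return the names of the jobs in `config` that `event` should start.

    `config` stands in for a parsed packit.yaml, reduced to a `jobs` list
    whose entries carry `job` and `trigger` keys (a simplifying assumption).
    """
    return [
        job["job"]
        for job in config.get("jobs", [])
        if job.get("trigger") == event.trigger
    ]


config = {
    "jobs": [
        {"job": "copr_build", "trigger": "pull_request"},
        {"job": "tests", "trigger": "pull_request"},
        {"job": "propose_downstream", "trigger": "release"},
    ]
}

# A pull request event selects only the jobs triggered by pull requests.
tasks = plan_tasks(config, Event(trigger="pull_request"))
```

In a Temporal port, each returned name could map to an activity executed by the workflow; with Celery, each could map to a task in a chain.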

Where can Temporal help?

- Signals: sending data to active workflows, example

- Workflows and activities: https://github.com/temporalio/samples-python/blob/main/hello/hello_parallel_activity.py

Every new event would spawn a new workflow. That would somewhat resemble our pipeline. Celery tasks seem equivalent to activities.

Signals: I can't see how they would be useful in our workflow. If we need communication between tasks, we can just update values in the database.

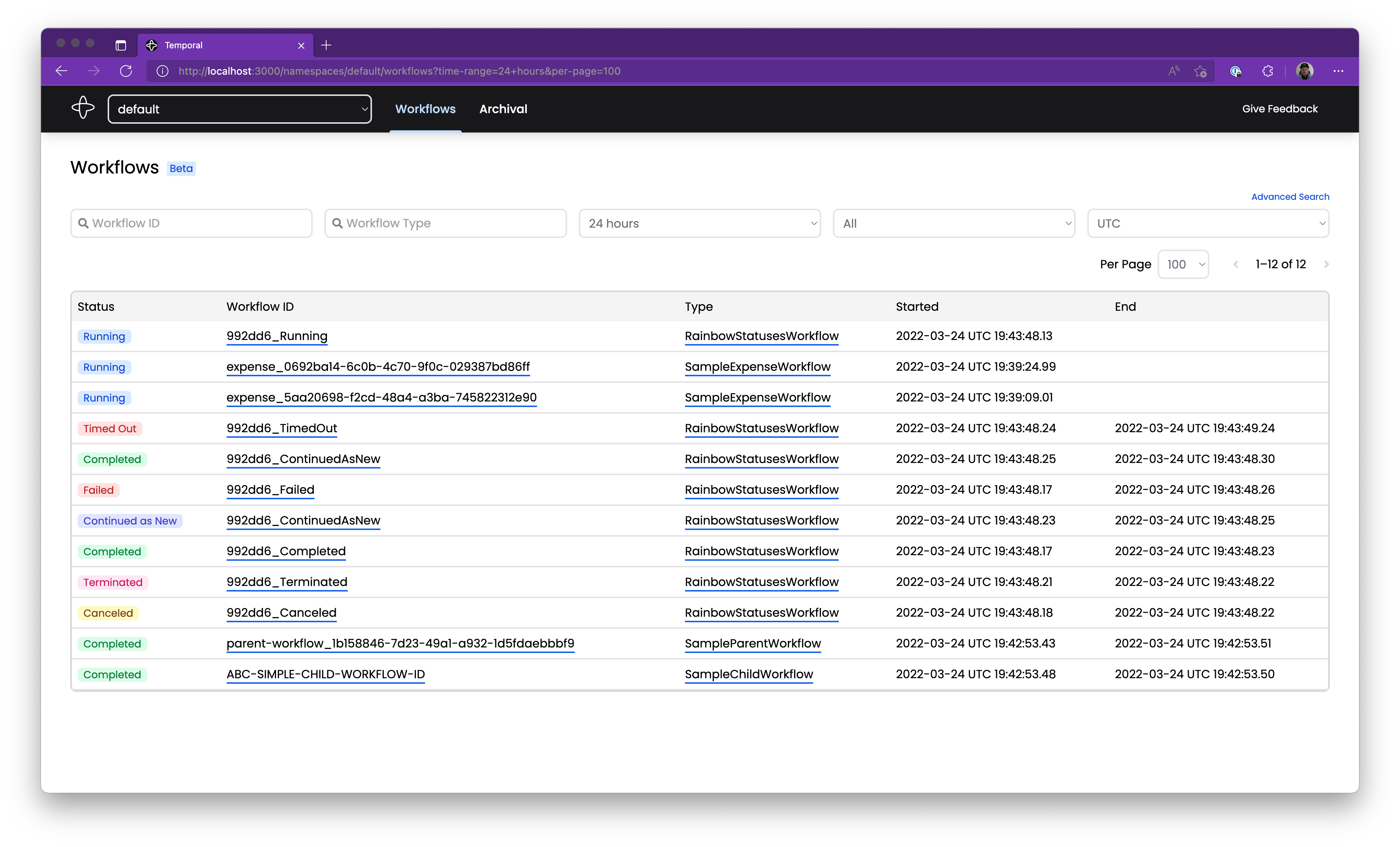

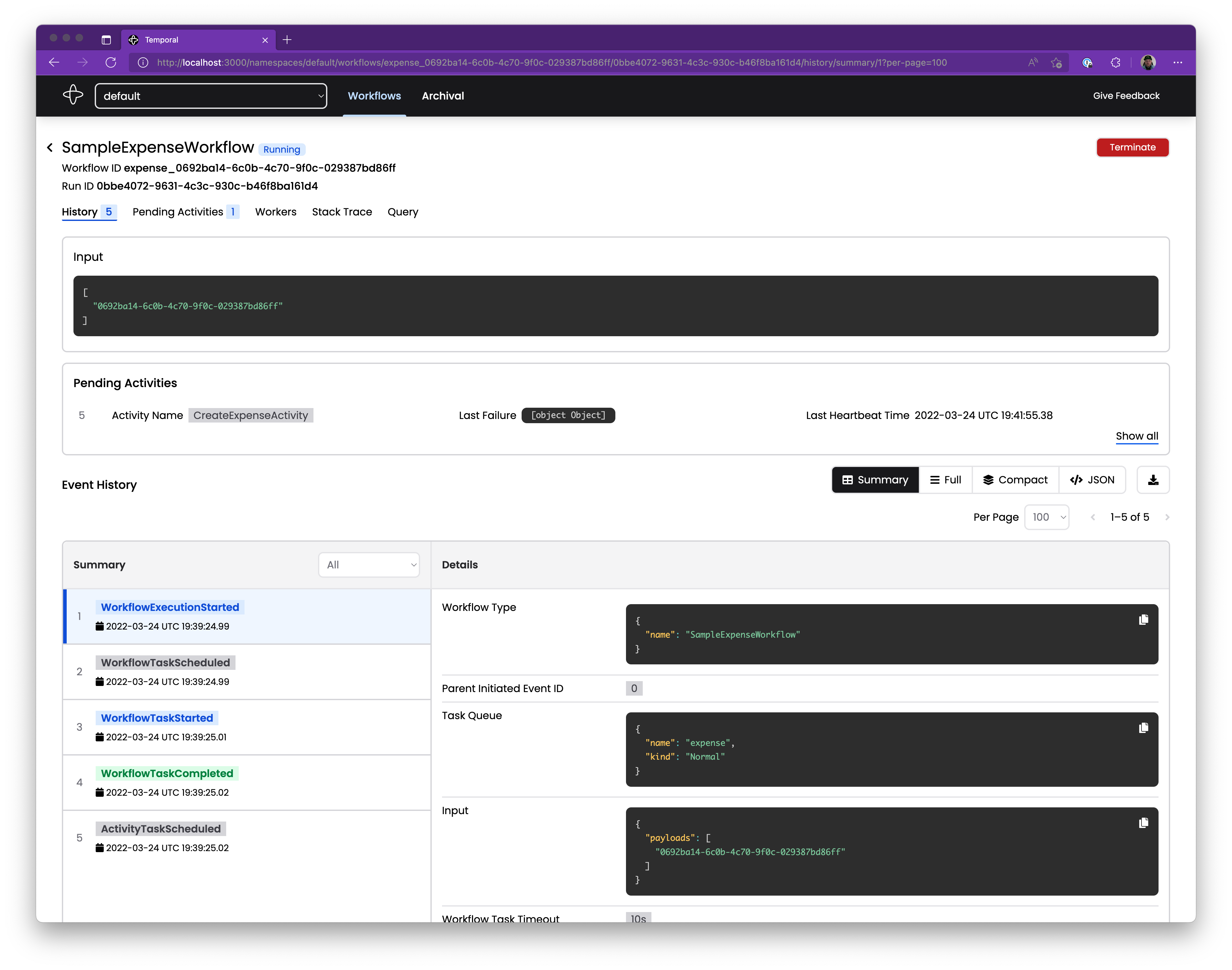

UI

The UI looks like the biggest Temporal benefit. The introspection is just amazing.

Deployment

It's hard to tell what the best deployment of Temporal is: https://docs.temporal.io/cluster-deployment-guide#elasticsearch

Example docker-compose with psql

Overall, though, it looks more complex to deploy than Celery.

Conclusion

Temporal offers a richer workflow engine than Celery. It wouldn't be trivial to port our solution from Celery to Temporal.

Except for the UI, I don't see a big advantage in Temporal. Hunor has ideas on how we can improve the processing of events. That sounds like time better spent than migrating to a new platform.